Bias in data analytics and machine learning represents a significant challenge that can perpetuate discrimination, cause unfair outcomes, and damage organizational credibility.

It occurs when systematic errors or prejudices in data or algorithms cause certain groups to be treated unfavorably.

Detecting and mitigating bias is essential for creating ethical, equitable, and accurate data-driven solutions.

Addressing bias requires a multifaceted approach covering data collection, model development, evaluation, and ongoing monitoring to ensure fairness and accountability throughout the analytics lifecycle.

Understanding Bias in Data and Models

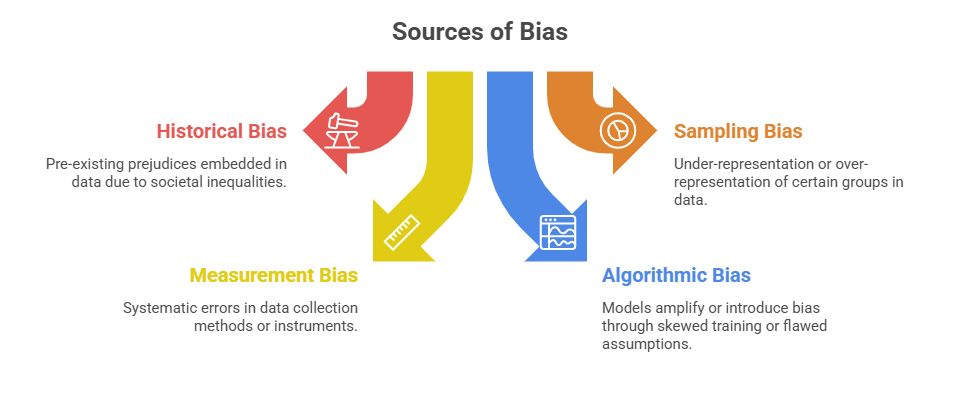

Understanding where bias comes from is critical to ensuring fairness and reliability in analytics and machine learning. Common sources include pre-existing societal biases, skewed sampling, flawed measurement, and algorithmic distortions.

Types of Bias

1. Selection bias: Non-random sampling that leaves the data unrepresentative of the population the model will serve.

2. Confirmation bias: Favoring data or results conforming to preconceived notions.

3. Reporting bias: Preferential recording or publication of certain outcomes, so the data over-represents them relative to how often they actually occur.

4. Proxy bias: Use of surrogate variables that inadvertently encode sensitive attributes, such as a postal code that closely tracks ethnicity.
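
Proxy bias can be screened for before any model is trained. The Python sketch below is one simple illustration, not a standard test: it checks how well a single categorical feature predicts a sensitive attribute on its own. If guessing the most common sensitive value within each feature value is far more accurate than guessing the overall most common value, the feature may be acting as a proxy. The function name `proxy_strength` and the toy postal-area data are hypothetical.

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values, sensitive_values):
    """Compare how well a feature predicts a sensitive attribute vs. a baseline.

    Returns (baseline_accuracy, proxy_accuracy):
      baseline_accuracy - always guess the overall most common sensitive value
      proxy_accuracy    - guess the most common sensitive value within each
                          feature value (computed in-sample, so optimistic)
    """
    if not feature_values or len(feature_values) != len(sensitive_values):
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(sensitive_values)
    baseline = Counter(sensitive_values).most_common(1)[0][1] / n

    # Count sensitive values separately for each feature value.
    by_feature = defaultdict(Counter)
    for f, s in zip(feature_values, sensitive_values):
        by_feature[f][s] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_feature.values())
    return baseline, correct / n


if __name__ == "__main__":
    # Hypothetical toy data: postal area vs. a sensitive group label.
    postal_area = ["A", "A", "A", "B", "B", "B", "C", "C"]
    group = ["x", "x", "x", "y", "y", "y", "x", "y"]
    print(proxy_strength(postal_area, group))  # (0.5, 0.875)
```

Because the score is computed in-sample, features with many unique values (such as IDs) score close to 1.0 trivially; evaluating on a held-out split or requiring a minimum count per feature value would make the check more robust.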

Methods for Detecting Bias

Bias can subtly affect outcomes, so systematic detection is critical for ethical and accurate decision-making. Useful techniques include demographic analysis, statistical fairness measures, model inspection, subgroup testing, and ongoing monitoring.

1. Data Analysis: Examine demographic distributions and feature correlations to identify imbalances.

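2. Fairness Metrics: Compare model outcomes across groups with statistical measures such as demographic parity (similar positive-prediction rates), equal opportunity (similar true positive rates), and disparate impact (the ratio of one group's positive-prediction rate to another's).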

3. Model Inspection: Analyze feature importance and decision paths to detect discriminatory influences.

4. Testing Across Subgroups: Evaluate model performance (accuracy, error rates) separately for different demographic segments.

5. Continuous Monitoring: Implement dashboards and alerts for ongoing bias detection after deployment.
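
Several of these checks reduce to simple per-group counts. The Python sketch below assumes binary labels and predictions encoded as 0/1 and a single group column; the function names and the alert thresholds (a maximum gap of 0.1, and the 0.8 "four-fifths" ratio often used as a rule of thumb for disparate impact) are illustrative assumptions, not a standard API. It computes per-group selection rates, true positive rates, and accuracy (subgroup testing), condenses them into fairness metrics, and flags any metric that crosses a threshold, the kind of check a monitoring job could run on each new batch of predictions. For simplicity, disparate impact is taken here as the lowest group selection rate divided by the highest; the more common formulation compares a designated unprivileged group with a privileged one.

```python
import math
from collections import defaultdict


def group_report(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate (TPR) and accuracy.

    Assumes y_true and y_pred contain 0/1 integers.
    """
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "pos": 0, "tp": 0, "correct": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["selected"] += p           # predicted positive
        c["pos"] += t                # actual positive
        c["tp"] += t * p             # true positive
        c["correct"] += int(t == p)
    return {
        g: {
            "selection_rate": c["selected"] / c["n"],
            # TPR is undefined for a group with no actual positives.
            "tpr": c["tp"] / c["pos"] if c["pos"] else math.nan,
            "accuracy": c["correct"] / c["n"],
        }
        for g, c in counts.items()
    }


def fairness_summary(report):
    """Collapse a per-group report into gap/ratio fairness metrics."""
    rates = [r["selection_rate"] for r in report.values()]
    tprs = [r["tpr"] for r in report.values() if not math.isnan(r["tpr"])]
    return {
        # Demographic parity: gap between highest and lowest selection rate.
        "demographic_parity_diff": max(rates) - min(rates),
        # Equal opportunity: gap between highest and lowest TPR.
        "equal_opportunity_diff": max(tprs) - min(tprs) if tprs else math.nan,
        # Disparate impact (simplified): lowest selection rate / highest.
        "disparate_impact_ratio": min(rates) / max(rates) if max(rates) > 0 else math.nan,
    }


def check_alerts(summary, max_diff=0.1, min_ratio=0.8):
    """Return names of metrics that breach the (hypothetical) thresholds.

    NaN compares as False, so undefined metrics never raise an alert.
    """
    alerts = [k for k in ("demographic_parity_diff", "equal_opportunity_diff")
              if summary[k] > max_diff]
    if summary["disparate_impact_ratio"] < min_ratio:
        alerts.append("disparate_impact_ratio")
    return alerts


if __name__ == "__main__":
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    report = group_report(y_true, y_pred, groups)
    summary = fairness_summary(report)
    print(report)
    print(summary)
    print(check_alerts(summary))
    # ['demographic_parity_diff', 'equal_opportunity_diff', 'disparate_impact_ratio']
```

In this toy data both groups have the same accuracy (0.75), yet their selection rates (0.75 vs. 0.25) and true positive rates (1.0 vs. 0.5) differ sharply, which is why accuracy alone can hide disparities.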

Strategies for Bias Mitigation

Fair and ethical models depend on proactive bias mitigation. Here are some strategies that address data representation, algorithmic fairness, and transparency.

1. Pre-Processing Techniques: Modify the training data to balance representation, for example by reweighting samples, oversampling underrepresented groups, or generating synthetic data.

2. In-Processing Methods: Incorporate fairness constraints or adversarial debiasing into model training so that bias is reduced during optimization itself.

3. Post-Processing Approaches: Adjust model outputs or decisions after training, for example by calibrating scores or applying group-specific decision thresholds, to make outcomes more equitable.

4. Diverse Teams and Governance: Engage heterogeneous experts and establish ethical review boards to oversee bias mitigation.

5. Transparency and Explainability: Use explainable AI (XAI) techniques to understand model behavior and communicate risks to stakeholders.
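
Reweighting, the first pre-processing technique listed, can be illustrated with the classic reweighing scheme, which gives each (group, label) combination the weight P(group) · P(label) / P(group, label). Combinations that are rarer than they would be if group and label were independent get weights above 1, and over-represented ones get weights below 1. The Python sketch below is a minimal illustration; `reweighing_weights`, `weighted_positive_rate`, and the toy data are hypothetical names introduced here.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each sample by P(group) * P(label) / P(group, label).

    Computed as n_group * n_label / (n * n_joint), which is algebraically
    the same. The weights always sum to the number of samples.
    """
    n = len(labels)
    n_group = Counter(groups)
    n_label = Counter(labels)
    n_joint = Counter(zip(groups, labels))
    return [
        n_group[g] * n_label[y] / (n * n_joint[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights):
    """Weighted share of positive labels (label == 1) within each group."""
    totals, positives = Counter(), Counter()
    for g, y, w in zip(groups, labels, weights):
        totals[g] += w
        if y == 1:
            positives[g] += w
    return {g: positives[g] / totals[g] for g in totals}


if __name__ == "__main__":
    groups = ["a"] * 4 + ["b"] * 4
    labels = [1, 1, 1, 0, 1, 0, 0, 0]
    weights = reweighing_weights(groups, labels)
    # Unweighted: group "a" is 75% positive, group "b" only 25%.
    print(weighted_positive_rate(groups, labels, [1.0] * len(labels)))
    # Weighted: both groups come out at (approximately) 50% positive.
    print(weighted_positive_rate(groups, labels, weights))
```

Many scikit-learn estimators accept such weights through the `sample_weight` argument of `fit`, for example `LogisticRegression().fit(X, y, sample_weight=weights)`. Reweighing cannot help a group that has no examples of a given label at all; oversampling, synthetic data, or further data collection is needed in that case.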

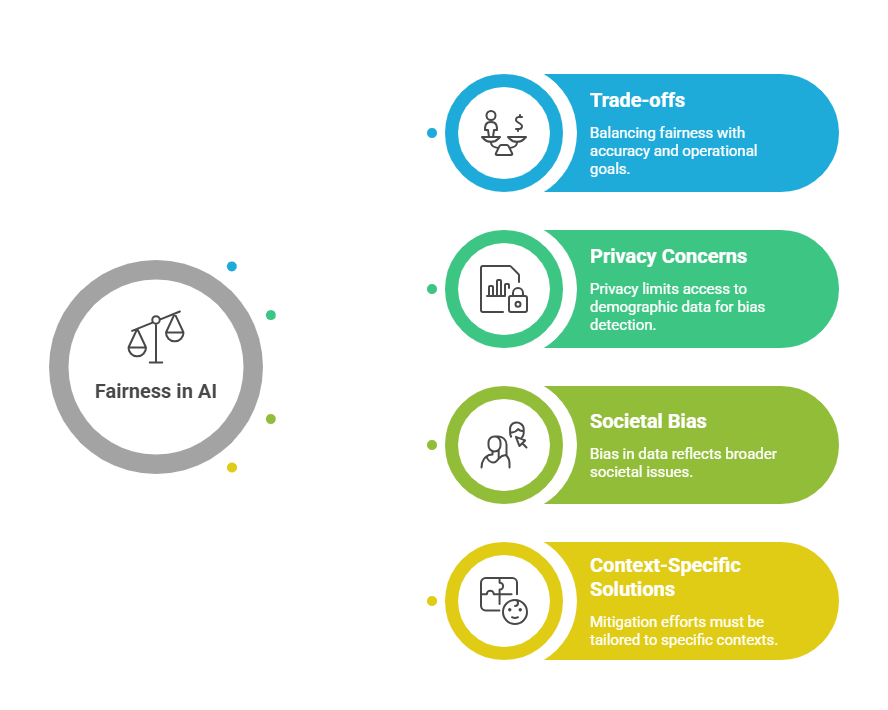

Challenges and Trade-offs
