Data quality is critical to the success of any analytics initiative. Poor data quality can lead to incorrect insights, misguided decisions, increased costs, and loss of stakeholder confidence.

Identifying data quality issues early is essential to ensure data is accurate, complete, consistent, and reliable.

Organizations must use systematic assessment frameworks to evaluate data quality, diagnose problems, and guide remediation efforts.

Understanding common data quality challenges and applying robust assessment methodologies supports trustworthy data-driven decision-making and operational excellence.

Common Data Quality Issues

Data quality issues can arise at various stages of data collection, storage, processing, and usage. Key issues include:

1. Accuracy Errors: Incorrect or outdated values that do not reflect real-world facts.

2. Incomplete Data: Missing values, records, or fields that reduce dataset comprehensiveness.

3. Inconsistency: Conflicting information recorded in different systems or formats.

4. Duplication: Multiple records representing the same entity, leading to skewed analysis.

5. Timeliness Issues: Data that is outdated or not available when needed for decision-making.

6. Validity Issues: Data not conforming to required formats, types, or business rules.

7. Data Noise: Irrelevant or erroneous data points that obscure meaningful patterns.
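Several of these issues can be caught with simple rule-based checks. The Python sketch below is a minimal illustration, assuming a hypothetical list of customer records and made-up business rules: `VALID_COUNTRIES`, `MAX_AGE_DAYS`, and the crude email test are assumptions, not standards. Accuracy errors and noise are not covered, because detecting them generally requires comparison against a trusted reference source or domain knowledge.

```python
from datetime import date

# Hypothetical customer records, for illustration only.
RECORDS = [
    {"id": 1, "email": "ana@example.com", "country": "US", "updated": date(2024, 5, 1)},
    {"id": 2, "email": None, "country": "US", "updated": date(2024, 5, 3)},
    {"id": 3, "email": "bo@example", "country": "usa", "updated": date(2021, 1, 9)},
    {"id": 1, "email": "ana@example.com", "country": "US", "updated": date(2024, 5, 1)},
]

VALID_COUNTRIES = {"US", "CA", "MX"}  # assumed business rule: ISO-style codes only
MAX_AGE_DAYS = 365                    # assumed freshness requirement


def looks_like_email(value):
    """Crude format check (assumption): non-empty local part, '@', dotted domain."""
    local, sep, domain = value.partition("@")
    return bool(local) and sep == "@" and "." in domain


def find_issues(records, today):
    """Return (record index, issue type) pairs for each rule a record breaks."""
    issues = []
    seen_ids = set()
    for i, rec in enumerate(records):
        if any(value is None for value in rec.values()):
            issues.append((i, "incomplete"))      # missing field
        email = rec["email"]
        if email is not None and not looks_like_email(email):
            issues.append((i, "invalid"))         # violates format rule
        if rec["country"] not in VALID_COUNTRIES:
            issues.append((i, "inconsistent"))    # same fact coded differently
        if rec["id"] in seen_ids:
            issues.append((i, "duplicate"))       # entity already seen
        seen_ids.add(rec["id"])
        if (today - rec["updated"]).days > MAX_AGE_DAYS:
            issues.append((i, "stale"))           # fails timeliness rule
    return issues
```

Running `find_issues(RECORDS, date(2024, 6, 1))` flags the record at index 1 as incomplete, the record at index 2 as invalid, inconsistent, and stale, and the record at index 3 as a duplicate of the one at index 0.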

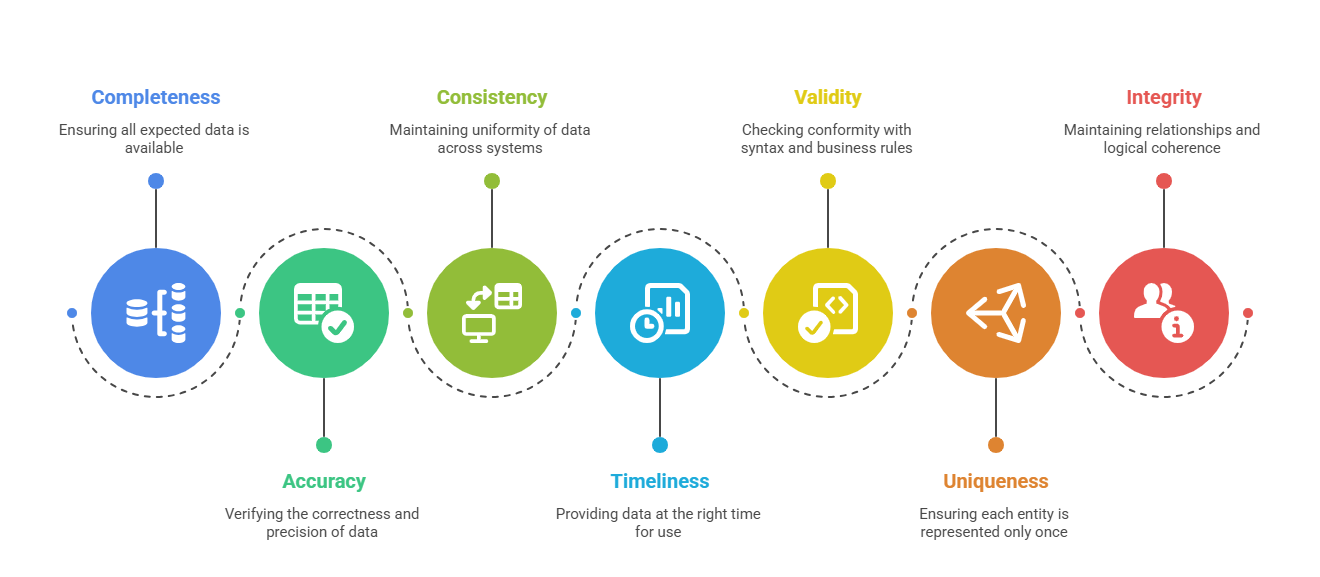

Data Quality Dimensions

To evaluate data quality effectively, organizations consider multiple dimensions:

Data Quality Assessment Frameworks

Effective data quality assessment frameworks provide structured approaches to detect and measure data quality issues. Popular frameworks include:

1. Total Data Quality Management (TDQM): An organization-wide approach encompassing data quality planning, control, and improvement. TDQM treats data as a product and emphasizes continuous evaluation and remediation integrated into business processes through a repeating define, measure, analyze, and improve cycle.

2. Data Quality Assessment Framework (DQAF): Developed by the International Monetary Fund (IMF) to assess statistical data, DQAF provides a comprehensive methodology emphasizing data quality dimensions, metadata, validation, and quality assurance.

3. DAMA-DMBOK (Data Management Body of Knowledge) Framework: Offers guidelines on data quality management as part of enterprise data governance, focusing on measurement, monitoring, roles, and policies.

4. Six Sigma for Data Quality: Applies Six Sigma principles, such as the DMAIC (Define, Measure, Analyze, Improve, Control) cycle, to measure data quality defects and implement process improvement strategies.
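Six Sigma's defect measurement can be made concrete with defects per million opportunities (DPMO), which is commonly converted to a sigma level using the conventional 1.5-sigma shift. This Python sketch assumes that each checked field of each record counts as one defect opportunity; that mapping and the function names are illustrative choices, not part of any framework specification.

```python
from statistics import NormalDist


def dpmo(defects, units, opportunities_per_unit):
    """Defects per million opportunities."""
    if units <= 0 or opportunities_per_unit <= 0:
        raise ValueError("units and opportunities_per_unit must be positive")
    total_opportunities = units * opportunities_per_unit
    if not 0 <= defects <= total_opportunities:
        raise ValueError("defects must be between 0 and the number of opportunities")
    # Multiply before dividing so only one floating-point rounding step occurs.
    return defects * 1_000_000 / total_opportunities


def sigma_level(dpmo_value, shift=1.5):
    """Short-term sigma level: z-score of the process yield plus the assumed 1.5 shift."""
    if not 0 < dpmo_value < 1_000_000:
        raise ValueError("DPMO must be strictly between 0 and 1,000,000")
    process_yield = 1 - dpmo_value / 1_000_000
    return NormalDist().inv_cdf(process_yield) + shift
```

For example, 120 failed field checks across 10,000 records with five checked fields each gives `dpmo(120, 10_000, 5) == 2400.0`, or roughly a 4.3 sigma level, while a process at 3.4 DPMO corresponds to the familiar six sigma.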

Data Quality Assessment Process

Typical steps in data quality assessment include:

1. Define Data Quality Requirements: Set quality criteria and acceptable thresholds based on business needs and standards.

2. Data Profiling: Examine data to produce statistical summaries, understand distributions, and spot anomalies.

3. Issue Identification: Detect missing values, outliers, duplicates, and inconsistencies.

4. Root Cause Analysis: Investigate sources and processes causing quality problems.

5. Data Quality Metrics and Reporting: Quantify quality levels using metrics like error rates, completeness percentages, and timeliness scores.

6. Remediation and Monitoring: Cleanse data, improve processes, and continuously track quality KPIs.
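Several of these steps can be sketched end to end for a single numeric column. This minimal Python illustration assumes a hypothetical `order_total` column with made-up thresholds for step 1, uses Tukey's interquartile-range (IQR) fences as one possible outlier test for step 3, and treats negative totals as invalid purely for illustration. Root cause analysis (step 4) is an investigative activity and is not modeled.

```python
import statistics

# Step 1 (hypothetical requirements): thresholds per column.
REQUIREMENTS = {"order_total": {"min_completeness": 0.95, "max_error_rate": 0.02}}

# Hypothetical sample column; None marks a missing value.
ORDER_TOTALS = [20.0, 22.5, 19.0, None, 21.0, -5.0, 23.0, 950.0, 20.5, 18.0]


def profile(values):
    """Step 2: basic statistical profile of a numeric column."""
    present = [v for v in values if v is not None]
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
        "mean": statistics.fmean(present) if present else None,
    }


def iqr_outliers(values, k=1.5):
    """Step 3: values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    present = [v for v in values if v is not None]
    q1, _, q3 = statistics.quantiles(present, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in present if v < low or v > high]


def metrics(values, is_valid):
    """Step 5: completeness over all rows, error rate over non-missing rows."""
    present = [v for v in values if v is not None]
    completeness = len(present) / len(values)
    errors = sum(1 for v in present if not is_valid(v))
    error_rate = errors / len(present) if present else 0.0
    return {"completeness": completeness, "error_rate": error_rate}


def check(column, values, is_valid):
    """Step 6: compare metrics with the step-1 requirements and list breaches."""
    req = REQUIREMENTS[column]
    m = metrics(values, is_valid)
    breaches = []
    if m["completeness"] < req["min_completeness"]:
        breaches.append("completeness")
    if m["error_rate"] > req["max_error_rate"]:
        breaches.append("error_rate")
    return m, breaches
```

On the sample data, `profile(ORDER_TOTALS)` reports one missing value and a mean of 121.0 inflated by the 950.0 entry, `iqr_outliers(ORDER_TOTALS)` flags -5.0 and 950.0, and `check("order_total", ORDER_TOTALS, lambda v: v >= 0)` reports a completeness of 0.9 with both thresholds breached. In practice the same checks would be rerun on a schedule so the resulting KPIs can be tracked over time.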

Tools and Techniques

Organizations employ various tools and techniques to automate and enhance quality assessment: