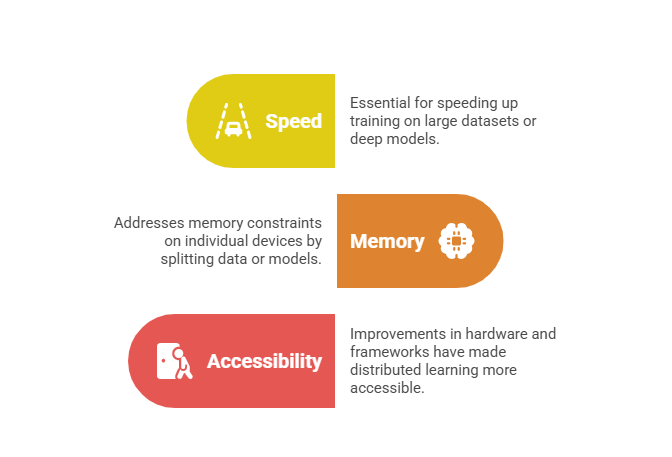

Distributed training is a foundational technique for scaling machine learning models beyond the limits of single-device computation. As datasets and models grow larger, training them requires distributing the computational load across multiple machines or devices.

This technique accelerates model convergence, enables handling very large models or datasets, and makes effective use of modern parallel computing hardware.

The two main paradigms are data parallelism and model parallelism, each with unique approaches and use cases for dividing work in distributed environments.

Distributed Training

Distributed training involves partitioning training workloads over several computational units such as GPUs, TPUs, or clusters of machines.

Successful distributed training requires synchronization, efficient communication, and workload balancing.

Data Parallelism

Data parallelism replicates the entire model across multiple devices, each processing a unique subset of the data simultaneously.

1. Each device computes gradients on its mini-batch independently.

2. Gradients are aggregated synchronously (or asynchronously) to update the shared model parameters.

3. Gradients are typically aggregated with an all-reduce collective; bandwidth-efficient variants such as ring all-reduce help keep communication overhead low.
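The three steps above can be sketched on a toy problem. The following is a minimal single-process simulation, not a real distributed program: the helper names (`grad`, `shard`, `all_reduce_mean`) are hypothetical, the "devices" are just list entries, and the model is a one-parameter linear fit chosen only so the arithmetic is easy to follow.

```python
# Toy synchronous data-parallel training for the model y = w * x.
# All helper names are illustrative; none of this is a library API.

def grad(w, batch):
    """Mean gradient of 0.5 * (w*x - y)^2 over a batch of (x, y) pairs."""
    return sum((w * x - y) * x for x, y in batch) / len(batch)

def shard(data, num_devices):
    """Split the data into equal contiguous shards, one per device."""
    size = len(data) // num_devices
    return [data[i * size:(i + 1) * size] for i in range(num_devices)]

def all_reduce_mean(values):
    """Stand-in for an all-reduce: every device receives the mean value."""
    mean = sum(values) / len(values)
    return [mean] * len(values)

data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]  # generated by y = 2x
num_devices = 2
replicas = [0.0] * num_devices  # each device holds an identical copy of w
lr = 0.05

for _ in range(200):
    shards = shard(data, num_devices)
    # Step 1: each device computes a gradient on its own shard.
    local_grads = [grad(w, s) for w, s in zip(replicas, shards)]
    # Steps 2-3: gradients are averaged so every device sees the same value.
    synced = all_reduce_mean(local_grads)
    # Every replica applies the same update, so the copies stay identical.
    replicas = [w - lr * g for w, g in zip(replicas, synced)]

print(replicas)
```

Because the shards are the same size, the averaged gradient equals the gradient over the full batch, which is why the replicas behave like a single model trained on all of the data.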

Advantages: Data parallelism is simple to implement, with support in widely available frameworks such as PyTorch's DistributedDataParallel and TensorFlow's MirroredStrategy.

It also scales effectively with increasing batch sizes and a growing number of devices, making it a practical and efficient choice for distributed training setups.

Challenges: Communication overhead increases with the number of devices involved, which can eventually become a bottleneck.
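To make this bottleneck concrete, here is a rough cost estimate for a ring all-reduce under a simple latency-plus-bandwidth model. The function name and the numbers (gradient size, per-step latency, link bandwidth) are assumptions chosen for illustration; real systems depend on topology, overlap with computation, and the collective library in use.

```python
def ring_all_reduce_time(num_devices, message_bytes, latency_s, bandwidth_bytes_per_s):
    """Estimated ring all-reduce time under a simple latency/bandwidth model.

    A ring all-reduce takes 2 * (N - 1) steps, and in each step every device
    sends a chunk of message_bytes / N to its neighbour.
    """
    if num_devices == 1:
        return 0.0  # nothing to communicate
    steps = 2 * (num_devices - 1)
    chunk = message_bytes / num_devices
    return steps * (latency_s + chunk / bandwidth_bytes_per_s)

# Assumed values for illustration only: 400 MB of gradients,
# 10 microseconds of latency per step, 10 GB/s per link.
for n in (2, 8, 64, 512):
    t = ring_all_reduce_time(n, 400e6, 10e-6, 10e9)
    print(f"{n:4d} devices: {t * 1e3:.2f} ms")
```

In this model the bandwidth term levels off near twice the message size divided by the link bandwidth, while the latency term keeps growing linearly with the number of devices.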

Additionally, the use of larger batch sizes often necessitates careful tuning of learning rates and training schedulers to maintain model performance and stability.
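One widely used starting point for this tuning is to scale the learning rate linearly with the global batch size and ramp it up over the first few steps. The sketch below assumes that heuristic; the function names, base values, and warmup length are hypothetical, and the rule is a rule of thumb rather than a guarantee of stable training.

```python
def scaled_learning_rate(base_lr, base_batch_size, global_batch_size):
    """Linear scaling heuristic: grow the learning rate with the global batch size."""
    return base_lr * global_batch_size / base_batch_size

def warmup_lr(target_lr, step, warmup_steps):
    """Ramp linearly up to target_lr over warmup_steps, then hold it constant."""
    if step >= warmup_steps:
        return target_lr
    return target_lr * (step + 1) / warmup_steps

# Assumed setup: a learning rate of 0.1 tuned for a batch of 32 on one device,
# now run on 8 devices with 32 samples each.
per_device_batch = 32
num_devices = 8
global_batch = per_device_batch * num_devices
target = scaled_learning_rate(0.1, 32, global_batch)
schedule = [warmup_lr(target, s, warmup_steps=5) for s in range(7)]
print(global_batch, target, schedule)
```

The warmup avoids applying the full scaled learning rate while the model is still far from a reasonable solution, which is where large-batch training is most likely to diverge.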

Model Parallelism

Model parallelism splits the model itself across devices, with each device holding and computing a different part of the model.

1. Suitable for very large models that exceed single-device memory capacity.

2. Forward and backward passes traverse devices sequentially, passing intermediate data.

3. Variants include pipeline parallelism, which assigns groups of layers to different devices and splits each mini-batch into micro-batches so that the devices can work on different micro-batches at the same time.
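The effect of pipelining on device utilization can be sketched with a small scheduling simulation. It makes simplifying assumptions that are not from the text above: every stage takes exactly one time tick per micro-batch, only the forward pass is modeled, and the function names are hypothetical.

```python
def pipeline_forward_schedule(num_stages, num_microbatches):
    """Return schedule[tick][stage] = micro-batch index, or None if the stage is idle.

    Assumes one tick per stage per micro-batch and models the forward pass only.
    """
    total_ticks = num_stages + num_microbatches - 1
    schedule = []
    for tick in range(total_ticks):
        row = []
        for stage in range(num_stages):
            # A stage can process micro-batch m only after the previous
            # stage has finished it, so stage s sees m at tick s + m.
            mb = tick - stage
            row.append(mb if 0 <= mb < num_microbatches else None)
        schedule.append(row)
    return schedule

def idle_fraction(schedule):
    """Share of (tick, stage) slots in which a stage has nothing to do."""
    slots = [slot for row in schedule for slot in row]
    return slots.count(None) / len(slots)

sequential = pipeline_forward_schedule(num_stages=4, num_microbatches=1)
pipelined = pipeline_forward_schedule(num_stages=4, num_microbatches=8)
print(idle_fraction(sequential))  # 0.75
print(idle_fraction(pipelined))
```

With four stages and a single micro-batch, each device is busy for only one tick out of four. Splitting the same work into eight micro-batches leaves 12 of 44 slots idle, so most of the waiting disappears.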

Advantages: Model parallelism enables the training of extremely large models, even those on the scale of GPT-3, by distributing the computational load across devices.

It is also highly memory-efficient because model parameters are partitioned across devices, allowing training that would otherwise exceed hardware limitations.
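A back-of-the-envelope calculation shows why partitioning matters. The sketch below counts weight storage only, assuming 2 bytes per parameter (16-bit floats) and a perfectly even split; it ignores gradients, optimizer state, and activations, all of which add to the real footprint. The function name is hypothetical.

```python
def weight_memory_gb(num_params, bytes_per_param, num_shards=1):
    """Memory for the weights alone, per shard, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / num_shards / 1e9

gpt3_params = 175e9  # GPT-3 has roughly 175 billion parameters

full_model = weight_memory_gb(gpt3_params, bytes_per_param=2)
per_device = weight_memory_gb(gpt3_params, bytes_per_param=2, num_shards=8)
print(f"unsharded: {full_model:.2f} GB, split over 8 devices: {per_device:.2f} GB")
```

Under these assumptions the weights alone need about 350 GB, more than a single accelerator typically provides, while an eight-way split brings the per-device share down to under 44 GB.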

Challenges: Model parallelism is complex to implement, because the parts of the model must be carefully partitioned and scheduled across devices.

Additionally, communication latency between devices can impact overall efficiency, and maintaining well-balanced workloads is crucial to achieving optimal performance.

Hybrid Parallelism

Hybrid parallelism combines data and model parallelism to capitalize on the strengths of both: the model is split across a group of devices, and that group is replicated so that each replica processes a different subset of the data.

It is commonly used in large-scale deep learning systems and plays a key role in training multi-billion-parameter models with improved scalability and performance.
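One way to picture a hybrid setup is as a grid of device ranks, where each row holds one full copy of the model split across several devices and each column links the devices that hold the same model shard. The layout below (contiguous ranks within a model-parallel group) and the function name are assumptions for illustration, not the convention of any particular framework.

```python
def build_groups(world_size, model_parallel_size):
    """Arrange ranks in a grid of data-parallel replicas x model-parallel shards.

    Returns (model_groups, data_groups):
      model_groups: ranks that together hold one full copy of the model
      data_groups:  ranks that hold the same shard and average its gradients
    """
    if world_size % model_parallel_size != 0:
        raise ValueError("world_size must be divisible by model_parallel_size")
    mp = model_parallel_size
    dp = world_size // mp
    model_groups = [list(range(r * mp, (r + 1) * mp)) for r in range(dp)]
    data_groups = [list(range(c, world_size, mp)) for c in range(mp)]
    return model_groups, data_groups

model_groups, data_groups = build_groups(world_size=8, model_parallel_size=2)
print(model_groups)  # [[0, 1], [2, 3], [4, 5], [6, 7]]
print(data_groups)   # [[0, 2, 4, 6], [1, 3, 5, 7]]
```

Here eight devices form four model replicas of two shards each. Activations flow within a model group, while gradient averaging happens within a data group.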
